How Distributed Tracing Solves One of the Worst Microservices Problems

Distributed Tracing Solves Some Big Pain Points with Microservices

Historically most web applications were developed as monolithic architectures. The entire application shipped as a single process implemented on a single runtime. Ultimately this architectural choice makes scaling software development extremely painstaking and tedious, because 100% of code changes submitted by members of any development team target a single code base. Under monolithic designs deployments are executed as “all or nothing” affairs - either you deploy all parts of your application at once or you deploy none of them.

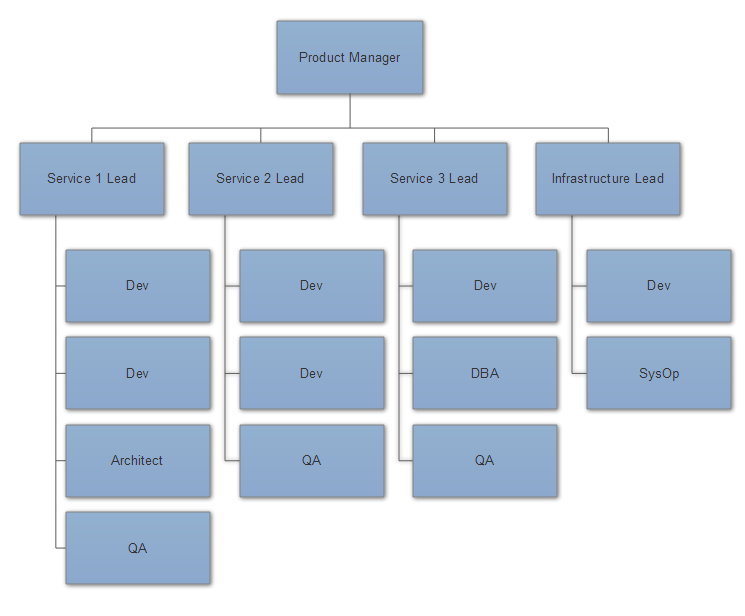

It’s for painful reasons like this that the industry is moving away from monoliths and towards distributed architectures such as microservices. What microservices provide isn’t product scalability; they provide people scalability - the ability to easily partition your software development organization along the same lines as you partition your application with service boundaries.

DevOps Implications of Microservices

Microservices provide enormous agility and flexibility to software development organizations. By partitioning our large applications into interdependent services which communicate via explicit network communication contracts, each team encapsulates their implementation from the others. This makes it possible, in theory, for each team to choose the best tools for the job - if Service 1’s requirements are best satisfied using Node.JS and Redis but Service 2’s are better handled with .NET and SQL Server, both of these teams can make those choices and develop / deploy their services independently of each other.

In practice, microservices are really a trade off for one set of organizational and technical problems for others. While the benefits of microservices amount to greater independence; clearer organizational boundaries and division of labor; and greater agility those benefits come with some distinct costs:

- Loss of coherence - now that the work to fulfill a single end-user request is now broken...