Model Context Protocol, Without the Hype

MCP is very useful, but it's not curing cancer. Here's why you should use it.

We haven’t talked much about AI and LLM-driven development here at Petabridge, aside from a webinar we ran a year ago, but we’ve been using it heavily in our day jobs:

- Creating the visuals for “Why Learn Akka.NET?”;

- Doing the CSS and Jekyll auto-layout for Akka.NET Bootcamp 2.0;

- Transforming Incrementalist into a significantly more powerful .NET build tool;

- Radically improving Akka.IO.Tcp’s performance and stability; and

- Just this week, we deployed massive performance and architecture improvements to Sdkbin, and Claude / Cursor were absolutely essential in helping us design, test, and bug-fix them.

One of the tools that’s allowed us to apply LLM-assisted coding successfully to massive code bases like Akka.NET and Sdkbin is the Model Context Protocol (MCP). In this post and its accompanying YouTube video, we’re going to explain what it is without dipping into the hyperbole you usually find on platforms like LinkedIn and X.

What Is MCP?

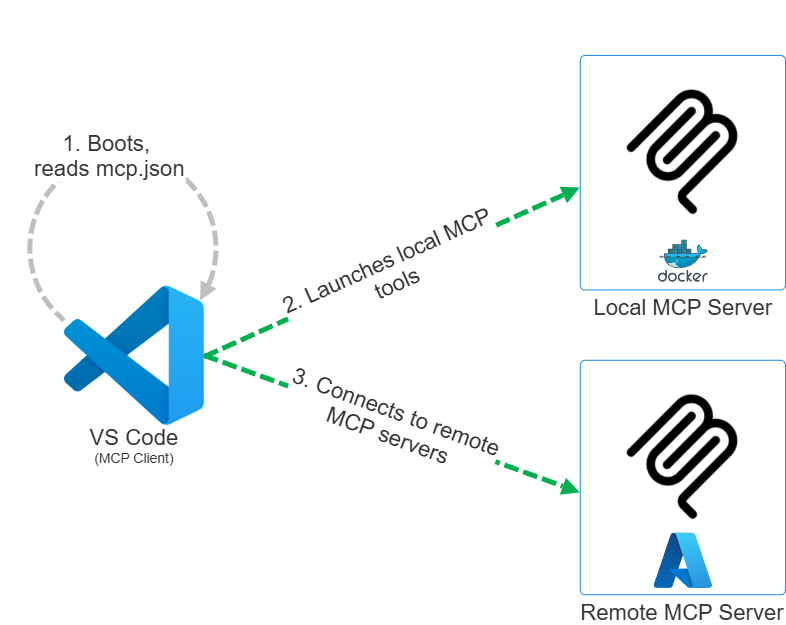

Model Context Protocol (MCP) is an interoperability standard for exposing data, tools, and applications to LLM applications in a portable way. If you write an MCP server, then any MCP client, such as VS Code, Cursor, or JetBrains Rider’s AI Assistant, should be able to work with it seamlessly¹.

MCP servers have two modes of operation:

- Local - the MCP client launches the MCP server executable and communicates with it via JSON-RPC over the process’s standard input and standard output (stdio);

- Remote - the MCP server is already up and running, remotely, and the MCP client communicates with it over HTTP, using Server-Sent Events (SSE) or, in newer revisions of the spec, the Streamable HTTP transport.
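To make the local mode concrete, here is a minimal Python sketch of the server side of the stdio transport. The client writes one JSON-RPC message per line to the server’s standard input, and the server writes one response per line to standard output. The `echo` tool, the server name, and the pinned protocol version string are hypothetical choices for illustration. The sketch handles only `initialize`, `tools/list`, and `tools/call`, and a real server should use an official MCP SDK rather than hand-rolling the protocol.

```python
import json
import sys
from typing import Optional, TextIO

# Hypothetical server identity; the protocol version is one published MCP revision.
SERVER_INFO = {"name": "demo-server", "version": "0.1.0"}
PROTOCOL_VERSION = "2024-11-05"

# A single hypothetical tool, with a JSON Schema describing its arguments.
TOOLS = [
    {
        "name": "echo",
        "description": "Echo the supplied text back to the caller.",
        "inputSchema": {
            "type": "object",
            "properties": {"text": {"type": "string"}},
            "required": ["text"],
        },
    }
]


def _error(msg_id: object, code: int, message: str) -> dict:
    """Build a JSON-RPC error response."""
    return {"jsonrpc": "2.0", "id": msg_id, "error": {"code": code, "message": message}}


def handle(message: dict) -> Optional[dict]:
    """Handle one JSON-RPC message; return a response, or None when no reply is due."""
    if "id" not in message or "method" not in message:
        # Notifications (e.g. "notifications/initialized") and responses get no reply.
        return None
    msg_id = message["id"]
    method = message["method"]
    params = message.get("params") or {}
    if method == "initialize":
        result = {
            "protocolVersion": PROTOCOL_VERSION,
            "capabilities": {"tools": {}},
            "serverInfo": SERVER_INFO,
        }
    elif method == "tools/list":
        result = {"tools": TOOLS}
    elif method == "tools/call":
        if params.get("name") != "echo":
            return _error(msg_id, -32602, f"Unknown tool: {params.get('name')}")
        text = (params.get("arguments") or {}).get("text", "")
        # Tool results are a list of content blocks; plain text is the simplest kind.
        result = {"content": [{"type": "text", "text": text}], "isError": False}
    else:
        return _error(msg_id, -32601, f"Method not found: {method}")
    return {"jsonrpc": "2.0", "id": msg_id, "result": result}


def serve(stdin: TextIO = sys.stdin, stdout: TextIO = sys.stdout) -> None:
    """Read newline-delimited JSON-RPC from stdin; write one response per line to stdout."""
    for line in stdin:
        line = line.strip()
        if not line:
            continue
        response = handle(json.loads(line))
        if response is not None:
            # json.dumps escapes newlines inside strings, so each message stays on one line.
            stdout.write(json.dumps(response) + "\n")
            stdout.flush()
```

A config entry whose `command` runs this script would launch it as a dedicated process, and the script would call `serve()` at startup. Any logging should go to standard error, because standard output is reserved for protocol messages.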

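When you fire up an MCP client, like VS Code or Cursor, it’ll read configuration entries from an mcp.json file that look like the following. The exact file location and top-level key vary by client: Cursor expects `mcpServers`, as shown here, while VS Code expects `servers`.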

```json
{
  "mcpServers": {
    "postgmem": {
      "url": "http://old-gpu:5000/sse"
    },
    "mssql": {
      "command": "docker",
      "args": [
        "run",
        "-i",
        "--rm",
        "-e",
        "MSSQL_CONNECTION_STRING",
        "mssql-mcp:latest"
      ],
      "env": {
        "MSSQL_CONNECTION_STRING": "Server=host.docker.internal,1533; Database=db; User Id=test-user; Password=MyStr0NgP@assword!;TrustServerCertificate=true;"
      }
    },
    "microsoft.docs.mcp": {
      "type": "http",
      "url": "https://learn.microsoft.com/api/mcp"
    }
  }
}
```

For local servers, each VS Code / Cursor / etc. instance executes the command and launches its own dedicated MCP server process.

For remote servers, the MCP client simply connects over HTTP to the already-running server at the configured `url`.
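The local/remote split in that config can be sketched from the client’s side. The snippet below is a rough illustration, not how VS Code or Cursor actually implement it. It treats entries with a `command` as local servers to spawn, with their `env` block merged over the current environment, and entries with a `url` as remote servers to connect to. `classify`, `launch_spec`, `spawn`, and `plan` are hypothetical helper names, and the connection string is a placeholder.

```python
import json
import os
import subprocess
from typing import Dict, List, Tuple

# A copy of the config above with a placeholder connection string.
CONFIG_TEXT = """
{
  "mcpServers": {
    "postgmem": {"url": "http://old-gpu:5000/sse"},
    "mssql": {
      "command": "docker",
      "args": ["run", "-i", "--rm", "-e", "MSSQL_CONNECTION_STRING", "mssql-mcp:latest"],
      "env": {"MSSQL_CONNECTION_STRING": "<connection string>"}
    },
    "microsoft.docs.mcp": {"type": "http", "url": "https://learn.microsoft.com/api/mcp"}
  }
}
"""


def classify(entry: dict) -> str:
    """Entries with a command are launched locally; entries with a URL are remote."""
    if "command" in entry:
        return "local"
    if "url" in entry:
        return "remote"
    raise ValueError(f"Unrecognized MCP server entry: {entry}")


def launch_spec(entry: dict) -> Tuple[List[str], Dict[str, str]]:
    """Build the argv and environment used to start a local server process."""
    argv = [entry["command"], *entry.get("args", [])]
    # Variables from the config's "env" block override the inherited environment.
    env = {**os.environ, **entry.get("env", {})}
    return argv, env


def spawn(entry: dict) -> subprocess.Popen:
    """Start one dedicated server process; JSON-RPC then flows over its stdin/stdout pipes."""
    argv, env = launch_spec(entry)
    return subprocess.Popen(
        argv, env=env, stdin=subprocess.PIPE, stdout=subprocess.PIPE, text=True
    )


def plan(config: dict) -> Dict[str, str]:
    """Map each configured server name to 'local' or 'remote'."""
    return {name: classify(entry) for name, entry in config["mcpServers"].items()}
```

The bare `-e MSSQL_CONNECTION_STRING` flag tells `docker run` to copy that variable's value from the environment it was started with into the container. That is why the config sets the connection string under `env` rather than inlining it in `args`.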

What Can MCP Do to Help LLMs?

MCP can expose data, tools, and applications in a highly portable way for LLM applications.

Let’s start with two MCP servers I’ve developed:

- https://github.com/Aaronontheweb/postg-mem - a remote memory MCP server, accessed over SSE, that preserves context between agent sessions. I forked this from Dario Griffo’s original and have a private fork we’re planning on open-sourcing soon too.

- https://github.com/Aaronontheweb/mssql-mcp - a local-process MCP server that allows LLMs to query SQL Server instances. This has been very useful in facilitating our major schema migrations for Sdkbin.

There are thousands of MCP servers available that enable LLMs to do all sorts of things:

- Interact with popular cloud services like GitHub, Notion, Dropbox, etc;

- Query live system status using cloud platforms, observability tools, or CLIs like kubectl;

- Control headless web browsers and load-testing systems;

- Store and retrieve application-specific context; and

- Thousands of other possibilities.

It really is quite powerful, and you should watch our companion video, “Model Context Protocol Without the Hype,” for live examples.

What MCP Is Not

MCP is not going to cure cancer or create a $1T market cap company, no matter what anyone says. All of the MCP aggregator startups that are flying around right now will be obsolete within two years.

SaaS and cloud providers will simply offer their own MCP servers hosted on public environments now that MCP supports authorization over the HTTP streaming transport.

MCP and REST APIs Are Nothing Alike

A question I see a lot: “Why can’t the AI just talk directly to the HTTP APIs? Why do we need a whole separate new thing to enable LLM interactivity with these platforms?”

REST APIs are for procedural programming - strongly typed functions with strongly typed inputs yield strongly typed outputs.

LLMs, by contrast, function by synthesizing language - not by invoking methods.

MCP tools are fundamentally grammar extensions for large language models - they are control surfaces that feel more like query languages (e.g., SQL) or command-line interfaces than REST APIs. These are “language models,” after all: they want to synthesize language and get tokens back!

That’s really the fundamental distinction between MCP and REST - with MCP, you want to enable the LLM to string a chain of verbs and nouns together in hopes of performing some function. SQL queries and CLI invocation fit perfectly into this paradigm.
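Here is a hypothetical sketch of that idea: a single CLI-style tool whose input is a `<verb> <noun> [--field value ...]` string rather than a set of typed endpoints. The inventory, the verbs, and the command syntax are all invented for illustration. The point is that the LLM composes a sentence in a small grammar, and errors come back as plain language it can read and correct.

```python
import shlex
from typing import Dict, List

# Hypothetical state the tool can inspect, standing in for a real system.
INVENTORY: Dict[str, List[Dict[str, str]]] = {
    "pods": [
        {"name": "api-1", "namespace": "prod", "status": "Running"},
        {"name": "api-2", "namespace": "prod", "status": "CrashLoopBackOff"},
        {"name": "worker-1", "namespace": "dev", "status": "Running"},
    ],
}
VERBS = ("list", "count")


def run_command(command: str) -> str:
    """One tool, many sentences: e.g. 'list pods --namespace prod'."""
    try:
        tokens = shlex.split(command)
    except ValueError as exc:  # e.g. an unclosed quote
        return f"error: could not parse command ({exc})"
    if len(tokens) < 2:
        return "error: expected '<verb> <noun> [--field value ...]'"
    verb, noun, rest = tokens[0], tokens[1], tokens[2:]
    if verb not in VERBS:
        return f"error: unknown verb '{verb}'; valid verbs: {', '.join(VERBS)}"
    if noun not in INVENTORY:
        return f"error: unknown noun '{noun}'; valid nouns: {', '.join(INVENTORY)}"
    if len(rest) % 2 != 0:
        return "error: filters must be '--field value' pairs"
    filters: Dict[str, str] = {}
    for flag, value in zip(rest[::2], rest[1::2]):
        if not flag.startswith("--"):
            return f"error: expected a --field flag, got '{flag}'"
        filters[flag[2:]] = value
    # Every filter is an equality match against the row's fields.
    rows = [r for r in INVENTORY[noun] if all(r.get(k) == v for k, v in filters.items())]
    if verb == "count":
        return str(len(rows))
    return "\n".join(" ".join(f"{k}={v}" for k, v in row.items()) for row in rows)
```

Adding a new verb or filter extends what the LLM can say without adding another tool definition to every prompt.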

Subtleties With MCP

There are some things about working with MCP that you won’t easily learn until you’ve had some practice.

LLMs Will Not Call Tools by Default

Even tool-friendly language models like Claude and Qwen will not call tools by default; they prefer to answer from their own trained weights instead. Therefore, you will have to encourage the LLM to use tools in one of two ways:

- Explicitly tell the LLM to use a particular tool for some purpose in the course of your normal prompting or

- Write a core system prompt that will advise the LLM on when it’s appropriate or necessary to call a particular tool.

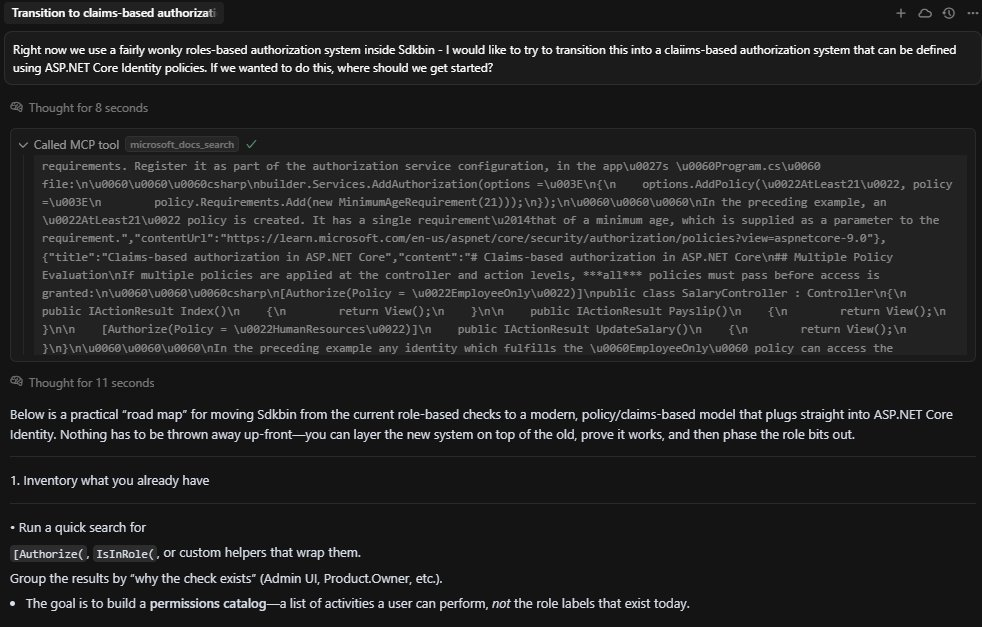

I prefer system prompts myself - here’s one I wrote for invoking the Microsoft Learn Documentation MCP server:

```markdown
## Querying Microsoft Documentation

You have access to an MCP server called `microsoft.docs.mcp` - this tool allows you to search through Microsoft's latest official documentation, and that information might be more detailed or newer than what's in your training data set.

When handling questions around how to work with native Microsoft technologies, such as C#, F#, ASP.NET Core, Microsoft.Extensions, NuGet, Entity Framework, the `dotnet` runtime - please use this tool for research purposes when dealing with specific / narrowly defined questions that may occur.
```

The result - when I asked Claude (via Cursor) about refactoring Sdkbin’s authorization to use ASP.NET Core Identity policies, this happened:

Claude invoked the MSFT docs MCP tool automatically and started searching for relevant documentation before beginning work.

MCP Servers Should Expose a Small Number of Dynamic Tools

Each time you send a prompt, your LLM client transmits the definitions of every tool available for calling along with it. The larger the number of tools, the more tokens this uses. The bigger issue, though, is that you don’t want to confuse the LLM by bombarding it with a large number of highly scoped, narrow tool calls².

Instead, prefer a smaller number of dynamic tools - my SQL Server MCP server exposes a total of 3 tools, and I could probably get away with just the `execute_sql` tool.
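A rough way to see the token cost is to compare the serialized size of one dynamic tool definition against a family of narrow lookup tools, since the client resends those definitions with every prompt. The sketch below makes three simplifying assumptions. It uses character counts as a crude stand-in for tokens, it invents the narrow tool names, and it uses Python’s built-in `sqlite3` in place of SQL Server. It is not the actual implementation of the mssql-mcp server’s `execute_sql` tool.

```python
import json
import sqlite3

# One dynamic tool (hypothetical schema modeled on an execute_sql-style tool).
EXECUTE_SQL_TOOL = {
    "name": "execute_sql",
    "description": "Run a SQL query against the connected database and return the results.",
    "inputSchema": {
        "type": "object",
        "properties": {"query": {"type": "string", "description": "The SQL to execute."}},
        "required": ["query"],
    },
}

# The narrow alternative: one hypothetical tool per (table, column) lookup.
NARROW_TOOLS = [
    {
        "name": f"get_{table}_by_{column}",
        "description": f"Look up rows in {table} by {column}.",
        "inputSchema": {
            "type": "object",
            "properties": {column: {"type": "string"}},
            "required": [column],
        },
    }
    for table in ("users", "orders", "invoices", "products")
    for column in ("id", "email", "created_at", "status")
]


def definition_size(tools: list) -> int:
    """Characters of tool definitions resent with every prompt (a crude token proxy)."""
    return len(json.dumps(tools))


def execute_sql(conn: sqlite3.Connection, query: str) -> str:
    """The single dynamic tool: the LLM composes the query, the server just runs it."""
    try:
        cursor = conn.execute(query)
    except (sqlite3.Error, sqlite3.Warning) as exc:
        # Return the error as text so the LLM can read it and revise its query.
        return f"SQL error: {exc}"
    if cursor.description is None:
        # No result set (INSERT/UPDATE/DDL): commit and report rows affected.
        conn.commit()
        return "OK" if cursor.rowcount < 0 else f"OK ({cursor.rowcount} row(s) affected)"
    header = [col[0] for col in cursor.description]
    lines = [" | ".join(header)]
    lines += [" | ".join(str(value) for value in row) for row in cursor.fetchall()]
    return "\n".join(lines)
```

With four tables and four columns, the narrow approach already needs 16 definitions, and it still can’t answer a question that joins two tables. The single `execute_sql` tool can.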

MCP Servers Should Use LLM-Friendly Input and Output Formats

JSON is bad both as an input and as an output format for MCP tooling - its syntax is both too loose (arbitrary) and too technical (hierarchical format rather than lexical). Prefer markdown, CLIs, query languages, or natural language when you can.
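As an illustration of the output side, the sketch below renders the same query results two ways. The first is a JSON array of objects, which repeats every key on every row. The second is a markdown table, which states the headers once. The helper names and the `NULL` placeholder for missing values are assumptions for this example, and a markdown table is only one of the LLM-friendly formats mentioned above.

```python
import json
from typing import Any, Sequence


def to_json(headers: Sequence[str], rows: Sequence[Sequence[Any]]) -> str:
    """The JSON shape many APIs return: every key repeated on every row."""
    return json.dumps([dict(zip(headers, row)) for row in rows], indent=2)


def to_markdown_table(headers: Sequence[str], rows: Sequence[Sequence[Any]]) -> str:
    """Render rows as a markdown table: headers once, then one line per row."""

    def cell(value: Any) -> str:
        # Show missing values explicitly and escape pipes so cells can't break the layout.
        return "NULL" if value is None else str(value).replace("|", "\\|")

    lines = [
        "| " + " | ".join(headers) + " |",
        "| " + " | ".join("---" for _ in headers) + " |",
    ]
    lines += ["| " + " | ".join(cell(v) for v in row) + " |" for row in rows]
    return "\n".join(lines)
```

For `headers = ["id", "status"]` and `rows = [(1, "Running"), (2, None)]`, the markdown version is a four-line table. It reads much like the prose the model already produces and is shorter than the equivalent indented JSON.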

Not All LLMs Are Equally Tool-Friendly

Some LLMs, like Claude and Qwen, are super tool-friendly. Others, like OpenAI’s models, are OK. And then there’s Gemini, which is really reluctant to call tools even when told to do so.

Your mileage may vary. I expect this will improve over time now that tool calling is becoming more common, but it might take a few more model release cycles before the median model is consistent about leveraging tools.

Final Thoughts

The last thing I’ll mention is that developing MCP servers is a better experience than developing HTTP APIs in almost every conceivable way:

- Can instantly plug into existing third-party software and get used right away;

- Minimal tooling required; and

- MCP tools can be composed dynamically by the LLMs.

The C# MCP SDK is still in an alpha state, but it’s quite good and has performed admirably for us in our production work here at Petabridge. Give it a try!

¹ Not all MCP clients currently support SSE / remote MCP servers, but they’ll get there soon.

² The official GitHub MCP server is the worst offender of this that I’ve come across, with 40+ tools exposed by default.
