10 Years of Building Akka.NET

Lessons learned from working on Akka.NET over the years.

It seems like just yesterday that I wrote “Akka.NET - One Year Later”; as of November 21st, 2023, Akka.NET is now ten years old.

I don’t have my original prototype Akka code anymore; all I have is Helios - the socket library I originally created to power Akka.NET’s remoting and clustering systems, which it did from 2014 until 2017. My first commit on that project dates back to Nov 21 2013. That’s when I mark this phase of my career: the Akka.NET years.

In this post I wanted to share some lessons learned from developing and maintaining one of the most ambitious, professional grade, and independent open source projects in the .NET ecosystem for over 10 years. Some of these lessons are technical; some are more business-oriented; and some are just kind of funny. In any case, I hope you find them helpful.

Akka.NET’s Resounding Success

I’ll go into some more details on the history of how Akka.NET evolved along with the .NET and cloud-native ecosystems in our “Akka.NET 10 Year Anniversary Celebration:”

The short version of that story: in 10 years we went from being a small project powered by a collaboration of enthusiasts and early adopters to being a major library that companies like Bank of America, United Airlines, Dell, ING, and more all depend upon for mission-critical business applications. We did this without the backing of venture capitalists or trillion-dollar tech giants. Our packages have been installed tens of millions of times and many of our users have introduced Akka.NET to multiple companies as they’ve progressed in their careers.

We have a lot more we’d like to accomplish over the next ten years! But first, it’s worth taking the time to reflect. How did we do it and what have we learned?

Build for Your Own Business Needs First

Back in the Fall of 2013, the original business case for Akka.NET was the marketing automation system we were developing at MarkedUp; that application would go on to become the first real Akka.NET application in the wild and certainly the first large scale distributed system built on top of it.

This acute business need is what drove me to discover the actor model and Akka in the first place: we quickly discovered that we needed scalable, in-memory server-side processing in order to deliver this low-latency, high availability product to our customers. The actor model meets these requirements quite naturally.

Because of this urgent business need we did the following:

- Formed what is now known as the Akka.NET project;

- Built the networking stack needed to create the initial versions of Akka.Remote - this was essential for us and non-trival to do; and

- Most importantly, professionalized the early versions of Akka.NET: make it consumable as a NuGet package, invest heavily in automated testing, add real build system tooling, clean up sloppy abstractions, enforce strict versioning practices in order to protect business users, and more.

The professionalization we brought to Akka.NET came about because we knew that from day one our Akka.NET application was going to be subjected to punishingly high traffic loads. We literally could not afford screw-ups on that front as they would destroy our startup on the launchpad.

There’s a tremendous difference in total product quality between libraries and frameworks built because the developers are technical enthusiasts who found the work interesting, versus built because they were essential to delivering a fully functioning product to customers. The former will work on the parts of the project that are fun and satisfying, the latter will work on the parts of the project that are important.

Adding new features is fun. Showing off new benchmarks is fun. Designing a new project logo is fun.

Writing documentation is important. Triaging bugs is important. Supporting additional platforms and use cases is important. Fixing security issues is important. Helping users learn the software is important. Planning safe and tested upgrade paths so users can migrate from older versions of the software to new ones is important.

A project only makes it to Akka.NET’s level of impact and beyond if the maintainers are rewarded by working on important things, not fun things. Building for your own business needs is the easiest way to create this self-reinforcing feedback loop.

If 3 or More Users Have the Same Problem, it’s the Product’s Problem

A heuristic I use for implementing our product roadmaps and prioritizing work is this:

Have 3 or more users from different organizations all had the same problem?

One of the accidental benefits of our Akka.NET Architecture Review services is that we get a deep look at potential Akka.NET product problems across multiple organizations.

My alarm bells go off when I start seeing customers creating home-rolled solutions for managing Akka.NET infastructure, i.e. tools that aren’t specific to their domain but rather are built explicitly for making it easier to operate Akka.NET-based systems. When I see multiple customers building the same type of tooling then clearly we have an unmet need we need to address.

We’ve followed this heuristic successfully over the years and built the following tools as a result:

- Petabridge.Cmd - there were lots of customers all rolling their own CLIs to try to manage Akka.Cluster, in particular; while I’m sure those were fun projects, we felt that this should be a concern handled by Petabridge.

- Akka.Hosting - virtually every production Akka.NET code base I’d ever reviewed had a bunch of pre-

ActorSystemstartup code responsible for injecting environment variables et al into HOCON. All of it was uniformly inelegant, ugly, and in my opinion should have been unnecessary. We made that the case after we built and shipped Akka.Hosting. - Akka.Streams.Kafka - unlike the first two items on this list, there was in fact an original Lightbend implementation of this library in Scala. We ported it over because we kept debugging issues with users writing their own custom Apache Kafka Akka.Streams stages over and over again. We decided it’d be better if the only stages we had to debug were ones we wrote and maintained with full source code access.

That’s not a complete list of course: there’s hundreds of bugs we’ve fixed over the years that all affected 3+ users.

But the rule has stood the test of time:

- It stops us from chasing ghosts in our code base that are, more often than not, the result of user error or misunderstanding;

- It stops us from implementing and maintaining niche features that no one else needs - i.e. A single user asked about FIPS compliance errors 4 years ago and we’ve never heard about it since. I’m glad we didn’t waste our time worrying about it.

- It helps us prioritize issues that are probably more widely felt than we’re aware of - usually, but not always, it takes a lot of activation energy for someone to file an issue and complain about a problem. If you have at least 3 people from distinct organizations doing it, it’s probably felt by at least 20x more organizations than are reporting it. Issues filed by single users with no other concurring reports are exceptions that prove the rule - some users sit atop “use case islands” where they really are unique.

Again, prioritize what’s important.

Users Hate SemVer; Want Upgrade Paths and Worthwhile Upgrades

I wrote an entire blog post about the impracticalities of SemVer (semantic versioning) in open source a couple of years ago and all of that still stands. Going a bit further: users hate SemVer because it creates a permission structure that enables sloppy behavior from maintainers.

Yes, not having a structured approach of any kind to introducing breaking changes into dependencies is worse than SemVer - no one wants to upgrade from Foo v1.1.0 to vFoo v1.1.1 and discover that their application no longer compiles.

However, that approach is not significantly worse than the same maintainer shipping Foo v2.0, v2.0.1, v2.1.0, v3.0.0, v3.1.0, v3.1.1, v4.0.0 all in the span of a month.

The problem isn’t communicating that there are breaking changes in this release: it’s the breaking changes themselves.

Breakings changes are sometimes necessary and when they are:

- They should be bundled together into carefully planned releases that have a big payoff for the end-user - i.e. in Akka.NET v1.5 we vastly improved the Akka.Cluster.Sharding system, the logging system, and more. Users don’t love having to plan upgrades that involve changing their own code - the less frequently they have to and the bigger the payoff, the easier it is to sell to management;

- They should have planned upgrade paths for breaking changes. we did this for Akka.NET v1.5 for both the Akka.Cluster.Sharding upgrade and the logging system upgrade and also for Akka.NET v1.4. You need to help users de-risk upgrading and having a planned, tested, and documented approach for doing so is the only definitive way to do it; and

- Ideally, breaking changes should be socialized and sold to users in advance through planned roadmap updates or communication channels like our Akka.NET Community Standups where we periodically float ideas to users.

The difference in mindset here is taking into account what it takes to make a user want to upgrade, not just telling them that there’s breaking changes in the new version. Doing this over a long period of time builds end-user trust in your engineering quality which lowers the risk perception users have about upgrading. This is crucial if you intend to build a business around open source software.

Performance is a Feature

We don’t use NBench much anymore, preferring Benchmark.NET for most of our performance measurements these days. However, some of the points I made in my original “Introducing NBench - Automated Performance Testing and Benchmarking for .NET” presentation at .NET Fringe 2016 still stand:

“Here’s where I got really frustrated [trying to find this Akka.Remote performance regression that got introduced by accident] - every single one of our contributors who cared about this issue wrote their own little benchmarks that had completely different instrumentation, completely different warmup and setup periods, etc. Everyone was trying to talk authoritatively about how fast Akka.Remote worked using completely different standards and completely different measures. The idea behind a benchmark is you have a single bench with a single mark, just like in woodworking [in order to cut wood into uniform sized pieces] - the system falls apart if everyone has their own standards.”

I’ve written numerous posts about techniques we’ve used for improving performance in Akka.NET over the years, but I want to talk more generally about how do you manage performance over a code base touched by many contributors over many releases + feature additions over a long period of time.

Be Careful About Absolute Benchmark Figures

I’ve deconstructed ASP.NET’s TechEmpower benchmark numbers on Twitter before, but Dustin Moris’ post “How fast is ASP.NET Core?” does a great job pulling the curtain back on some of the dirty tricks used to achieve some of ASP.NET’s impressive scores.

Why does anyone care? The absolute numbers are very useful for helping market the .NET platform vis-a-vis other runtimes like Java, Rust, Python, and Go. But no one will ever achieve those absolute numbers in a real-world application using ASP.NET’s values out of the box.

Ditto for when Orleans users compare Akka.NET’s performance to Orleans - those benchmarks are usually arranged to make Akka.NET run like Orleans:

- All messaging is done as request-response via

Ask<T>(Orleans is RPC-oriented) and - All messaging is done over the network to Cluster.Sharded actors (Cluster.Sharding works nearly identically to Orlean’s virtual actors).

Benchmarks are done this way because Akka.NET’s programming model is flexible and can run like Orleans; the reverse is not true.

Akka.NET is a fire-and-forget message-oriented framework that prioritizes flexibility both in terms of programming model (local, distributed, edge, embedded, etc) and state locality. Orleans is a distributed-systems first technology. Both frameworks models are different; prioritize different things; and thus, will have different performance characteristics in different scenarios. Therefore, any benchmarks comparing the two are going to have to make compromises somewhere in order to establish a measurable lowest common denominator.

What’s really useful about these comparisons for us as the maintainers of Akka.NET though is it shows where we have room to improve in these scenarios:

- What can we do to make our network stack faster and more efficient?

- How can we improve serialization?

- What can we do to improve the efficiency of Cluster.Sharding’s messaging system?

These questions all align quite well to real business value we can create for real-world Akka.NET users. Moreover, we like to compete and improve! It’s great to have multiple frameworks all competing for users and driving each other to improve. Value for users is what matters.

What’s not useful is if we went down the road of distorting our own benchmarks for the sake of producing a better absolute marketing number, at the expense of measuring real potential performance problems. For instance, if we inflated our Akka.Remote performance figures by sending back const byte as our message, without any real serialization involved, that might make our framework more impressive in our marketing materials but at the cost of actually improving the performance that impacts end-users.

The takeaway here is not to compromise on realistic end-user performance in exchange for pursuing nice-sounding benchmarks. Discover and fix real issues in your performance measurements. Lessons:

- Never introduce “benchmark modes” designed to improve performance by disabling critical functionality (i.e. bypassing serialization or real network connectivity) - you’re not solving any real problems when you do this and the numbers are misleading;

- Don’t constantly retool your benchmark in order to goose up better numbers - macro benchmarks become more valuable the longer they remain constant across releases of the software. Changing the benchmark’s functionality or measurements invalidates that historically valuable result;

- Don’t waste time worrying about what people who don’t even use your software think of it - that’s a downstream problem. Worry about solving the performance issues for people who are invested in using your software and that’ll take care of the latter; and

- Embrace competition - never sit on your laurels. Always be improving. Learn how to do it better and get after it.

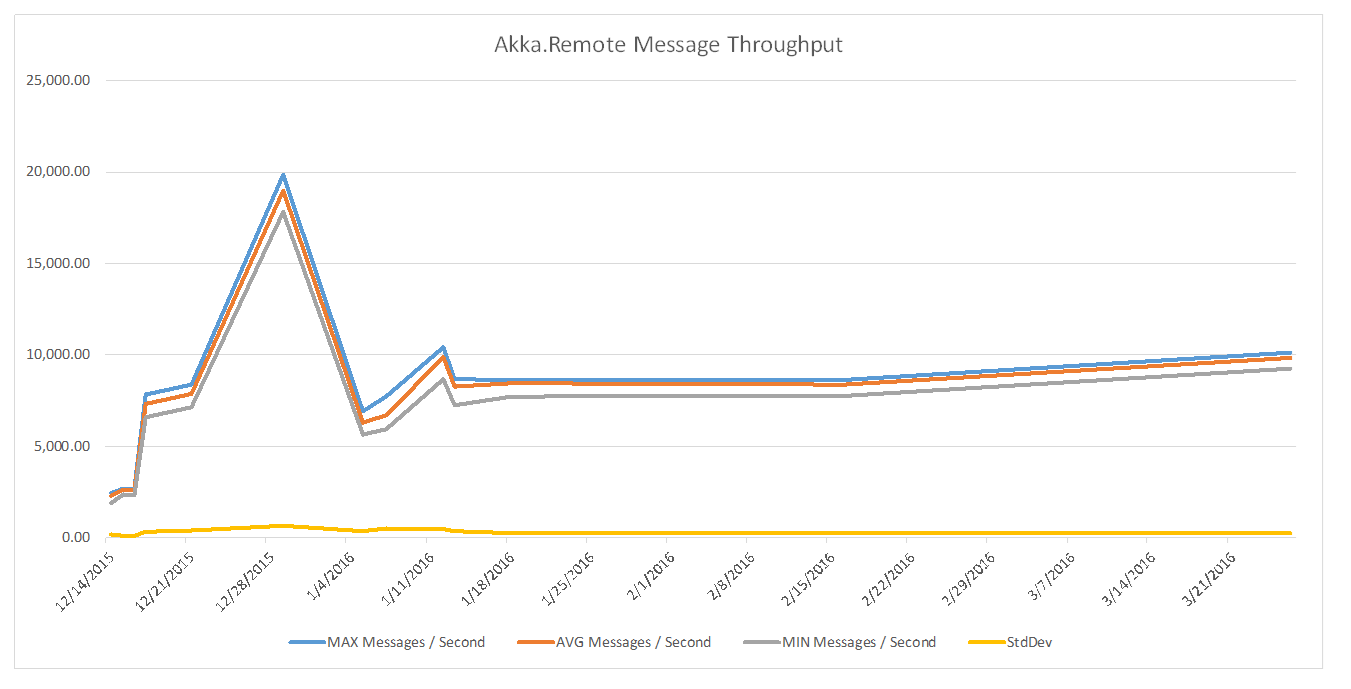

Think Data Lines, Not Data Points

It’s easy to benchmark a synchronous function with a tool like Benchmark.NET. It’s not easy to benchmark a distributed + asynchronous group of actors communicating over the network, even with a tool like Benchmark.NET, and there are many reasons for this:

- Non-determinism due to OS scheduling and other background workloads competing for CPU;

- Benchmarking tools don’t necessarily support concurrency very well in their instrumentation; and

- An issue with all benchmarks: different machines will produce very different results.

Therefore, when trying to measure performance changes it’s important that you think in terms of lines, not individual data points.

My ideal data visualization for benchmarks is a scatter plot with the ability to filter between hardware profiles: I want to see a distribution of all benchmark values taken with the same piece of hardware over a period of relevant source control commits. That will help us identify outliers (i.e. the one benchmark run ruined by Windows Defender kicking in or whatever) and observe the general trend movement of the data.

Relative Improvements over Time Add up

What’s truly most important, from the point of view of managing performance in a product like Akka.NET over time, is consistently making relative performance improvements without destroying or compromising functionality.

I gave some examples from our “2022 Akka.NET Year-in-Review and Future Roadmap” earlier this year:

The “low hanging fruit” for micro optimizations has largely been picked clean since the start of the v1.4 effort in 2020 (1.4.47 is ~50% faster than 1.4.0) - the return on investment of engineering labor simply isn’t there for the parts of Akka.NET that have received a lot of performance testing over the years (core, Akka.Remote;) there are definitely still some gains left on the table in areas that have been more relatively underserved, such as DData, Akka.Streams, and Akka.Persistence.

All of the small improvements we made to Akka.NET throughout the 51 separate v1.4 revision releases (1.4.0 through 1.4.51) resulted in a > 50% improvement for end-users without them having to change any of their code, take their cluster offline, or any other costs. That improvement resulted from continuously testing and trying new things in lots of small, easily measurable changes that add up over time.

I pulled the curtain back on some of these experiments we ran in my “.NET Systems Programming Learned the Hard Way” talk last year:

Keep doing lots of small optimizations all the time. Test, re-test, and test again.

There are Bigger Problems Than Micro-Optimizations can Solve

I’m an enormous fan of mechanical sympathy in practice: understanding how your hardware works and reorienting your software to please it with lots of small, precise changes will add up to massive performance improvements over time.

For instance, eliminating boxing of value types (structs) by using .NET generics in some high-traffic areas will reduce pressure on the garbage collector and generally reduce latency + improve throughput.

However, when dealing with something like the performance of a single Akka.Remote connection - micro-optimizations can only get you so far. In the case of Akka.Remote’s performance what we fundamentally have is a flow control issue, which I describe in detail here:

These types of performance problems can’t be solved easily through clever optimizations: these require reinventing the way fundamental parts of the framework execute, upgrading the runtimes, and probably creating breaking changes for end-users. Sometimes that’s what it takes. Be bold about solving them, but make sure the juice is worth the squeeze for users afterward.

Beware of Dependencies, Even Microsoft Ones

I can’t tell you the number of times users have implored Akka.NET to depend on some Microsoft.Extensions library or some other massively popular .NET library over the years. “Integrate directly with Microsoft.Extensions.Configuration directly in the core library!” etc.

We’re happy to take dependencies on other libraries through abstractions we can control, such as Akka.DependencyInjection, but we have to jealously guard the most heavily used parts of our libraries.

Why? Because even if a library comes from a trusted party like Microsoft, there’s no telling what direction it might take or what its longevity might look like.

Consider, for instance, all of the Azure Table SDK libraries that Akka.Persistence.Azure has had to migrate between over the years:

- WindowsAzure.Storage - up until 2021.

- Microsoft.Azure.Cosmos.Table - from 2021 to 2022.

- Azure.Data.Tables - from 2022 to 2023.

Bear in mind that the Azure Table Storage SDK in .NET is a rare profit-center library for Microsoft: development on it can be directly correlated to revenue increases. Yet despite that, the library has churned no less than three times and might still again in the future!

You don’t want to go overboard and never take any external dependencies either, but you do want to exercise caution: it’s impossible to predict how ecosystems and other individual projects may change in the future, therefore you want to do what you can to limit your exposure. Each time you take a dependency on a third party library in your own library, you’re exposing yourself to potential upstream risks / changes / et al that you’ll eventually have to propagate down to your users. Do so cautiously.

Owning “the Stack”

One debate that’s raged within the Akka.NET project over the course of its history is whether it makes sense for us to own other strongly dependent parts of our upstream stack:

- Serialization and

- Network transports.

We have done a combination of both in both of these areas:

- We outsource some serialization to Google.Protobuf and Newtonsoft.Json, while also maintaining Hyperion.

- We originally owned our own network stack with Helios, then we outsourced it to Microsoft’s DotNetty library, which was subsequently abandoned. Now we’re looking at building on top of a more durable standard like .NET’s QUIC protocol implementation in .NET 8.0.

Owning things like serializers and network transports is a lot of thankless, tireless work - hence why delegating it to someone like a FAANG company makes sense.

But it’s not a free ride. You can run into abandonment issues like DotNetty, but even worse are alignment issues. For instance: Google.Protobuf has been very slow and reluctant to adopt System.Memory constructs like Span<T> and Newtonsoft.Json will likely never adopt them.

So if Akka.NET sets “having a zero-allocation serialization pipeline” as an aspirational goal, we’re essentially dead in the water due to lack of alignment with our dependencies.

This is where making the decision to “own the stack,” or at least part of the stack, can be beneficial: it’s more work but you don’t have to work around the design decisions of another organization. Probably the best compromise here is to delegate your infrastructure to another library that is aligned with you, with the understanding that alignments can change over time.

Talk with Users Often

I don’t do as many conference appearances these days, but one of the things I always look forward to is speaking with production Akka.NET users. Back in 2015 there weren’t very many people using it in production yet, but now there are tens of thousands of developers who’ve used it in production.

It’s a trope in startup circles to say “talk to your customers” - and it’s absolutely true. The more I talk to users the more I learn about things that are important to them, other technologies they’re working with, and how Akka.NET fits into the picture for each of these different industries.

The most important things I’ve learned in these conversations are the reasons why users have trouble getting Akka.NET adopted internally: learning curve, performance, lack of relevant examples, and so on. As Bill Gates famously says: “positive feedback is not actionable.”

The Akka.NET Discord is also a great value-add in this respect, as are Akka.NET questions that pop up on GitHub Discussions or Stack Overflow. People pay for actual problems to be solved - put your ear to the ground so you can find and solve them.

Ecosystems and Platforms Change

Nothing did more to shake up Akka.NET’s early roadmap than the introduction of .NET Core in 2017 and going truly cross-platform. There’s been a tremendous ripple effect from decoupling .NET from Windows that has made Akka.NET’s target market significantly larger and more diverse across industries and use cases.

It’s really difficult to predict how and when these types of seismic shifts will happen, hence why it’s worth talking to users about them early and often. The single best reason for attending technology conferences is that the speakers consist of the ultra-early-adopter-evangelist types that will tell you about what’s coming down the pipeline.

For instance, another major shift in the .NET ecosystem that came about as a result of .NET Core was the renewed focus on performance. The introduction of System.Memory in .NET Core 3.0 had a tremendous impact that is still being widely felt throughout the entire .NET ecosystem even to this day, as it really created the possibility of zero copy operations accessible to many, many use cases.

However, this also introduces a source of alignment problems for a mature project like Akka.NET: we would love to take advantage of System.Memory, but our third party dependencies used in our networking and serialization stacks are either in a semi-abandoned state (Newtonsoft.Json, DotNetty) or are somewhat reluctant to fully embrace the capabilities of System.Memory (Google.Protobuf.)

These are just challenges that platform changes pose and they’re solvable. My colleague Gregorius already demonstrated what a modern Akka.NET serialization solution might look like (using C# Source Generators) in one of our September, 2023 Akka.NET Community Standup:

The Next 10 Years of Akka.NET

“Men make plans and God laughs,” as the saying goes. We do our best to plan and follow product roadmaps for Akka.NET but you’ll never know how ecosystems and priorities can change.

If I can share my wish list of short term things I’d like to accomplish with Akka.NET:

- Push Akka.Remote into the millions of messages per second range;

- Make all of Akka.NET AOT-friendly;

- Successfully ship our Akka.Cluster visualization + management project we’ve been working on - you may have heard us refer to it in Discord or in our Community Standups as “OpsCenter;”

- Refresh all of our documentation, tutorials, and training courses for Akka.NET; and

- Ship Akka.gRPC as a means of making it easy for non-.NET backends to freely and securely communicate with Akka.NET actors.

The next big picture item is Akka.NET 2.0, which introduces typed IActorRef in order to make it easier to discover problems with your applications during compilation rather than runtime. That will be a radical reimagination of how Akka.NET works and the “meta” for how to work with it - but all of those typed actors can still exist alongside existing Akka.NET actors and applications with no breaking changes. That’s the plan at least.

Beyond that though, I take things as they come - most of the major shifts in the technology we produce on the Akka.NET project have been in response to changes in demands, ecosystems, and needs. With the radical acceleration of “digital transformation” across many traditionally analog industries like manufacturing and energy production; the introduction of widely-available LLMs; and the continued push towards cloud services, who can tell what things will look like in five years?

Akka.NET’s adoption is growing larger and larger all the time with our rate of downloads increasing every day. Wherever the future is going, people are going to be building integral parts of it on top of Akka.NET and we’ll be right there with them building along the way.

If you liked this post, you can share it with your followers or follow us on Twitter!

- Read more about:

- Akka.NET

- Case Studies

- Videos

Observe and Monitor Your Akka.NET Applications with Phobos

Did you know that Phobos can automatically instrument your Akka.NET applications with OpenTelemetry?

Click here to learn more.